AI Terminology in Practice (For Leaders and Managers Who Still Need to Decide)

If you’ve sat in a planning meeting lately, you’ve heard some version of the classic AI buzzword pitch:

“We need an LLM agent, powered by GenAI, deployed on the cloud, with a vector database… by next sprint.”

Everyone nods. Meanwhile, half the room is thinking: Wait—what do those words actually mean?

After 15 years of building products, here’s what I’ve learned the hard way: teams don’t fail because they lack AI. They fail because they lack shared language. When terminology is fuzzy, scope becomes fuzzy. Expectations drift. Budgets inflate. Timelines break.

So this is a practical, jargon-free guide to core AI terms—written for business leaders, product managers, program managers, and anyone who wants clarity without a computer science degree.

TL;DR: The 30-Second Cheat Sheet

- AI = the umbrella term: machines doing “smart” tasks

- ML = systems learn patterns from data to predict/decide

- Deep Learning = ML using neural networks with many layers

- Generative AI = creates new content (text/images/audio/video)

- LLM = deep learning model specialized in language

- SLM = smaller language model for simpler tasks at lower cost

- Transformer = architecture that made modern LLMs scale

- Parameters = the model’s internal “stored learning”

- Training vs Inference = learning phase vs using phase

- Agents = AI that plans steps and can take actions

The “Cost / Complexity” Reality Check (Leaders love this part)

Before definitions, here’s the framing most teams need:

| Tech | What it’s good at | Typical cost profile | Complexity / risk profile |
|---|---|---|---|
| ML (classic) | Predicting outcomes from your business data | Lower (internal compute + people) | Medium (data quality, drift) |
| Deep Learning | Images, speech, messy real-world patterns | Higher (compute + data) | Higher (harder debugging, infra) |
| LLMs / GenAI (API) | Language tasks: draft, summarize, extract, chat | Can get expensive (token usage) | Medium–High (quality variance, privacy) |
| SLMs | Classification, extraction, simple summarization | Lower (sometimes ~1/10th of LLM cost) | Medium (must benchmark for accuracy) |
| Agents | Multi-step work across tools | High (latency + retries + tooling) | Highest (hallucinations, loops, action risk) |

Keep this table in mind as we go—because “what it means” matters less than what it will cost and how it can fail.

The Core Terms (Explained Like You’re Busy)

1) AI (Artificial Intelligence)

Textbook: Machines that exhibit intelligence.

In practice: The umbrella term for anything that feels “smart.”

AI includes:

- Machine Learning

- Deep Learning

- Generative AI

- Robotics

Daily-life example:

Google Maps rerouting you, Netflix recommendations, Face ID unlocking your phone—these are all AI in the real-world sense.

PM lens:

When someone says “AI,” ask:

- Are we predicting something, generating something, or taking actions?

That single question prevents most scope confusion.

2) ML (Machine Learning)

Textbook: A subset of AI that learns without explicit programming.

In practice: ML is pattern learning from past data to make predictions.

Daily-life example:

ML is like a barista learning your order. At first, they guess. Over time, they notice you always choose oat milk and less sugar—and they get it right.

Where ML shines:

- Fraud detection

- Churn prediction

- Demand forecasting

- Spam filtering

Cost/complexity note:

ML can be cheaper than LLM-heavy solutions if you already have strong internal datasets. The tradeoff is time: the hardest part is often data cleaning and alignment.
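To make “learning patterns from past data” concrete, here is a minimal Python sketch of about the simplest ML model there is: a one-split decision tree (often called a “decision stump”) that learns a churn cutoff from historical customers. The feature (days since last login) and all of the data are hypothetical, chosen only for illustration.

```python
from typing import List, Tuple

# Hypothetical historical data: (days_since_last_login, churned?)
HISTORY: List[Tuple[int, bool]] = [
    (1, False), (3, False), (5, False), (8, False), (12, False),
    (20, True), (25, True), (30, True), (45, True), (60, True),
]


def train_stump(rows: List[Tuple[int, bool]]) -> int:
    """Learn the cutoff that misclassifies the fewest past customers.

    Learned rule: predict churn when days_since_last_login >= cutoff.
    Candidate cutoffs are simply the values seen in the data.
    """
    best_cutoff, best_errors = rows[0][0], len(rows) + 1
    for candidate, _ in rows:
        errors = sum((days >= candidate) != churned for days, churned in rows)
        if errors < best_errors:
            best_cutoff, best_errors = candidate, errors
    return best_cutoff


def predict(cutoff: int, days_since_last_login: int) -> bool:
    """Apply the learned rule to a new customer."""
    return days_since_last_login >= cutoff


cutoff = train_stump(HISTORY)
print(cutoff)               # 20
print(predict(cutoff, 2))   # False
print(predict(cutoff, 40))  # True
```

Notice the split: `train_stump` scans history once, while `predict` is the cheap step that runs for every new customer. That is the same training vs inference distinction covered in section 8.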

3) Deep Learning

Simple meaning: ML using neural networks with many layers.

In practice: Deep learning is the modern engine behind vision, speech, and many “wow” moments.

Daily-life example:

Recognizing a cat in a clear photo is easy. Recognizing a cat in low light, partially blocked, in motion—that’s deep learning territory.

Cost/complexity note:

Deep learning tends to be more compute-heavy, but it’s often the best option when data is complex (images, audio, video).
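A sketch of what “layers” means mechanically: each layer multiplies its inputs by weights, adds a bias, and passes the result through a simple non-linearity (ReLU here). The tiny two-layer network below uses hand-picked weights (an assumption for illustration; real networks learn millions or billions of them during training) to compute XOR, a pattern no single straight-line rule can capture.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def relu(values: Vector) -> Vector:
    """Non-linearity: keep positives, zero out negatives."""
    return [max(0.0, v) for v in values]


def dense(inputs: Vector, weights: Matrix, biases: Vector) -> Vector:
    """One fully connected layer: each row of `weights` produces one output."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


# Hand-picked, hypothetical weights; in real deep learning these are learned.
HIDDEN_W: Matrix = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_B: Vector = [0.0, -1.0]
OUTPUT_W: Matrix = [[1.0, -2.0]]
OUTPUT_B: Vector = [0.0]


def network(x1: float, x2: float) -> float:
    hidden = relu(dense([x1, x2], HIDDEN_W, HIDDEN_B))  # layer 1 (hidden)
    return dense(hidden, OUTPUT_W, OUTPUT_B)[0]         # layer 2 (output)


for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(a, b, network(a, b))
```

Stacking layers is what lets the network carve out that non-straight pattern; deep learning stacks many more of them and learns the weights from data instead of having them typed in.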

4) Generative AI (GenAI)

Simple meaning: AI that creates new content.

In practice: GenAI produces text, images, audio, video, and code from prompts.

Daily-life example:

GenAI is like a super-fast creative intern:

- You give a brief (“write a launch email in a friendly tone”)

- It drafts five versions in seconds

- You review before shipping

One word leaders need to hear: hallucinations

GenAI models are probabilistic, not deterministic: they generate a plausible answer, not a guaranteed-correct one. They’re not calculators. They’re “plausibility engines.”
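To see why “probabilistic” matters, here is a minimal sketch of how a generative model picks its next word: score the candidates, turn the scores into probabilities (a softmax), then sample. The candidate words and scores are made up for illustration. Note that a wrong-but-plausible option still gets real probability, which is the intuition behind hallucinations.

```python
import math
import random
from typing import Dict


def softmax(scores: Dict[str, float], temperature: float = 1.0) -> Dict[str, float]:
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    scaled = {tok: s / temperature for tok, s in scores.items()}
    top = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - top) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def sample_next(scores: Dict[str, float], temperature: float, rng: random.Random) -> str:
    """Pick one next word at random, weighted by its probability."""
    probs = softmax(scores, temperature)
    words = list(probs)
    return rng.choices(words, weights=[probs[w] for w in words], k=1)[0]


# Hypothetical scores for completing "The contract was signed in ..."
# Suppose the true answer is "2022": the wrong "2021" still scores highest.
SCORES = {"2021": 2.0, "2022": 1.5, "Paris": 0.5}

rng = random.Random(7)
print({w: round(p, 2) for w, p in softmax(SCORES).items()})
# {'2021': 0.55, '2022': 0.33, 'Paris': 0.12}
print([sample_next(SCORES, 1.0, rng) for _ in range(10)])
```

Lowering `temperature` makes the top choice dominate, so output becomes more repeatable, but it does not make a wrong top choice correct.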

Cost/complexity note:

GenAI is fast to prototype, but costs scale with usage. Without limits, inference bills can become a surprise line item.

5) Large Language Model (LLM) — and the 2026 twist: SLMs

Simple meaning: An LLM is a deep learning model trained to understand and generate language.

In practice: LLMs power chat and many text workflows.

Daily-life example:

Autocomplete predicts the next word.

An LLM also predicts the next word (technically, the next token: a word or word fragment), but with context, tone, and shockingly useful behavior.
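The “autocomplete” half of that comparison fits in a few lines: count which word follows which in some text, then suggest the most frequent follower. This toy bigram model (the corpus is made up) only ever looks one word back; the LLM leap is conditioning on the whole context, using learned parameters instead of raw counts.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional


def train_bigrams(text: str) -> Dict[str, Counter]:
    """Count, for every word, which words come right after it."""
    words = text.lower().split()
    followers: Dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers


def suggest(followers: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent next word, or None if the word is unseen."""
    counts = followers.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical corpus for illustration.
CORPUS = (
    "please review the budget please review the deadline "
    "please approve the budget"
)
model = train_bigrams(CORPUS)
print(suggest(model, "please"))  # review
print(suggest(model, "the"))     # budget
```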

What LLMs do beyond “chat”:

- Summarize long documents

- Extract key fields (dates, names, risks)

- Classify support tickets

- Draft responses

- Translate

The trust anchor (why hallucinations happen)

Because LLMs are probabilistic, they can sound confident even when wrong. That’s why production systems add verification: retrieval, constraints, approvals, and fallbacks.
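Here is a minimal sketch of the “constraints and fallbacks” idea for an extraction task. The model output is faked as hard-coded JSON strings (no real model is called), and the field names and rules are hypothetical. Output that passes the checks is accepted automatically; anything else goes to a person.

```python
import json
from datetime import date
from typing import List

# Hypothetical schema for an extraction task.
REQUIRED_FIELDS = ("customer_name", "renewal_date", "risk_level")
ALLOWED_RISK = ("low", "medium", "high")


def validate(raw_output: str) -> List[str]:
    """Return a list of problems; an empty list means the output passed."""
    try:
        data = json.loads(raw_output)
    except json.JSONDecodeError:
        return ["output is not valid JSON"]
    if not isinstance(data, dict):
        return ["output is not a JSON object"]
    problems = [f"missing field: {f}" for f in REQUIRED_FIELDS if f not in data]
    if "renewal_date" in data:
        try:
            date.fromisoformat(str(data["renewal_date"]))
        except ValueError:
            problems.append("renewal_date is not a YYYY-MM-DD date")
    if "risk_level" in data and data["risk_level"] not in ALLOWED_RISK:
        problems.append("risk_level is not low/medium/high")
    return problems


def route(raw_output: str) -> str:
    """Accept clean output automatically; send anything else to a human."""
    return "auto-accept" if not validate(raw_output) else "human-review"


# Hypothetical model outputs.
good = '{"customer_name": "Acme", "renewal_date": "2026-03-31", "risk_level": "high"}'
bad = '{"customer_name": "Acme", "renewal_date": "March-ish", "risk_level": "severe"}'
print(route(good))    # auto-accept
print(route(bad))     # human-review
print(validate(bad))
```

Checks like these catch malformed or out-of-range output; they cannot tell whether a well-formed answer is actually true, which is why retrieval and human approvals still matter.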

PM Tip (very 2026):

You don’t always need a “Large” model. For simpler classification or extraction tasks, Small Language Models (SLMs) can do the job at a fraction of the cost (sometimes around 1/10th) without a meaningful drop in quality, provided you benchmark them properly on your own tasks.
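“Benchmark them properly” can start as simply as this sketch: run each candidate model over the same labeled sample of real work, then pick the cheapest one that clears your accuracy bar. The model names, prices, and predictions below are hypothetical placeholders (no real models are called); in practice the predictions would be each model’s actual output on your test set.

```python
from typing import Dict, List, Optional

# Hypothetical labeled sample: the correct category for 10 real tickets.
LABELS: List[str] = ["billing", "bug", "billing", "account", "bug",
                     "billing", "account", "bug", "billing", "account"]

# Hypothetical predictions per model, plus a made-up cost per 1,000 tickets.
CANDIDATES: Dict[str, Dict] = {
    "small-model": {
        "cost_per_1k": 0.50,
        "predictions": ["billing", "bug", "billing", "account", "bug",
                        "billing", "account", "billing", "billing", "account"],
    },
    "large-model": {
        "cost_per_1k": 5.00,
        "predictions": ["billing", "bug", "billing", "account", "bug",
                        "billing", "account", "bug", "billing", "account"],
    },
}


def accuracy(predictions: List[str], labels: List[str]) -> float:
    """Share of tickets where the model matched the correct label."""
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


def cheapest_passing(min_accuracy: float) -> Optional[str]:
    """Return the lowest-cost model whose accuracy meets the bar, if any."""
    passing = [
        (info["cost_per_1k"], name)
        for name, info in CANDIDATES.items()
        if accuracy(info["predictions"], LABELS) >= min_accuracy
    ]
    return min(passing)[1] if passing else None


print(cheapest_passing(0.90))  # small-model (it scores 0.9 here)
print(cheapest_passing(0.95))  # large-model
```

Ten examples is far too few for a real decision; the structure is the point: same test set, explicit accuracy bar, then cost.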

Cost/complexity note:

LLMs are often API-expensive at scale. Think:

(request volume × tokens per request × price per token of your chosen model) ≈ monthly bill.

Using SLMs for the “simple lanes” is one of the cleanest ways to control spend.
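The bill formula above is plain arithmetic, so it is easy to sketch. Every price and volume here is a hypothetical placeholder, not any vendor’s real rate; the point is the shape of the math when the simple lane moves to a cheaper model.

```python
def monthly_cost(requests: int, tokens_per_request: int, usd_per_million_tokens: float) -> float:
    """Monthly bill ≈ request volume × tokens per request × price per token."""
    return requests * tokens_per_request * usd_per_million_tokens / 1_000_000


# All numbers are hypothetical placeholders, not real vendor prices.
TOTAL_REQUESTS = 1_000_000      # requests per month
SIMPLE_REQUESTS = 700_000       # the "simple lane": classification, extraction
TOKENS_PER_REQUEST = 2_000      # input + output tokens combined
LLM_PRICE = 10.00               # USD per million tokens (made up)
SLM_PRICE = 1.00                # USD per million tokens (made up)

all_llm = monthly_cost(TOTAL_REQUESTS, TOKENS_PER_REQUEST, LLM_PRICE)
routed = (monthly_cost(SIMPLE_REQUESTS, TOKENS_PER_REQUEST, SLM_PRICE)
          + monthly_cost(TOTAL_REQUESTS - SIMPLE_REQUESTS, TOKENS_PER_REQUEST, LLM_PRICE))

print(f"Everything on the LLM: ${all_llm:,.0f}/month")  # $20,000/month
print(f"Simple lane on an SLM: ${routed:,.0f}/month")  # $7,400/month
```

Real pricing is often different for input and output tokens, so a production estimate would split `TOKENS_PER_REQUEST` into those two parts.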

6) Transformer

Simple meaning: The architecture that made modern LLMs work at scale.

In practice: Transformers use “attention” to focus on what matters in long inputs.

Daily-life example:

In a long email thread, you don’t treat every sentence equally. Your brain highlights:

- “Deadline moved to Friday”

- “Budget approved”

- “Legal needs review”

That selective focus is the intuition behind attention.
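For the technically curious, the core of attention is short. Below is a minimal sketch of scaled dot-product attention for a single query in plain Python: compare the query with each key, softmax the scores into weights, and blend the values by those weights. The two-number vectors for the email sentences are made up; real models use learned vectors with hundreds or thousands of dimensions and many attention heads.

```python
import math
from typing import List, Tuple

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: List[float]) -> List[float]:
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query: Vector, keys: List[Vector], values: List[Vector]) -> Tuple[List[float], Vector]:
    """Return (attention weights, weighted blend of the values)."""
    scale = math.sqrt(len(query))
    weights = softmax([dot(query, k) / scale for k in keys])
    blended = [sum(w * v[i] for w, v in zip(weights, values))
               for i in range(len(values[0]))]
    return weights, blended


# Hypothetical vectors, one per sentence in the email thread.
keys = [[4.0, 0.0],    # "Deadline moved to Friday"
        [0.0, 1.0],    # "Budget approved"
        [0.5, 0.5]]    # "Legal needs review"
values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
query = [1.0, 0.0]     # hypothetical question: "What changed about the schedule?"

weights, blended = attention(query, keys, values)
print([round(w, 2) for w in weights])
```

Most of the weight lands on the deadline sentence, so the blended result mostly carries that sentence’s information.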

7) Parameters

Simple meaning: The internal settings a model learns during training.

In practice: Parameters are the model’s stored instincts.

Daily-life example:

If the model were a chef, parameters would be the chef’s learned taste: what usually works, what usually pairs, and what “Italian style” often looks like.

Reality check:

More parameters can mean more capacity—but it’s not a guarantee of better results for your business. Fit beats flex.
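“Parameters” is literally a count of learned numbers. A sketch for a simple fully connected network: each layer has one weight per input-output pair plus one bias per output. The layer sizes are arbitrary examples, and transformer layers have a different layout, but the counting idea is the same.

```python
from typing import List


def count_parameters(layer_sizes: List[int]) -> int:
    """Per layer: weights (inputs × outputs) plus biases (one per output)."""
    return sum(
        n_in * n_out + n_out
        for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
    )


print(count_parameters([4, 8, 2]))           # 58
print(count_parameters([1024, 4096, 1024]))  # 8393728
```

Even that second, still-small example has over 8 million parameters, which hints at why models with billions of them are costly to train and to serve.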

8) Training vs Inference (The Most Confused Pair)

Here’s the clean comparison you can paste into a program review doc:

| Feature | Training | Inference |
|---|---|---|
| Goal | Learning the patterns | Using the patterns |
| When it happens | One-time or periodic | Every time you run the model |
| Cost | Massive (compute + time) | Moderate to high (per use, scales with volume) |
| Analogy | Studying for the Bar Exam | Practicing law with clients |
| What can go wrong | Bad data → bad learning | Hallucinations, weak prompts, tool errors |

PM lens:

Most “surprise AI bills” come from inference, not training. If your feature goes viral, inference spend spikes fast.
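A back-of-the-envelope sketch shows why: training is paid once (or periodically), while inference is paid on every request, so it grows with adoption. Every number below is a hypothetical placeholder.

```python
from typing import List, Tuple


def cumulative_spend(training_cost: float, cost_per_request: float,
                     monthly_requests: List[int]) -> List[Tuple[float, float]]:
    """(training spend, inference spend to date) at the end of each month.

    Training is paid once up front; inference is paid on every request.
    """
    history: List[Tuple[float, float]] = []
    inference_to_date = 0.0
    for requests in monthly_requests:
        inference_to_date += requests * cost_per_request
        history.append((training_cost, inference_to_date))
    return history


# Hypothetical: a one-time $50k training run, $0.01 per request,
# and traffic that doubles every month after launch.
TRAFFIC = [100_000 * 2 ** month for month in range(6)]

for month, (train, infer) in enumerate(cumulative_spend(50_000, 0.01, TRAFFIC), start=1):
    flag = "  <- inference has overtaken training" if infer > train else ""
    print(f"Month {month}: training ${train:,.0f} | inference to date ${infer:,.0f}{flag}")
```

With these made-up numbers, inference passes the entire training bill in month 6. Teams that call a vendor’s model instead of training their own often see even less training cost, so inference is nearly the whole bill.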

9) Agents

Simple meaning: Systems that plan steps and can take actions.

In practice: Agents combine:

- an LLM/SLM “brain”

- a plan (steps)

- tools (APIs, search, databases)

- guardrails (rules, limits, approvals)

Daily-life example:

A chatbot answers your question.

An agent tries to solve your problem end-to-end.

- Chatbot: “Your order is delayed.”

- Agent: “I checked the carrier, opened a ticket, notified the customer, and updated the ETA.”

Cost/complexity note (important):

Agents often bring:

- High latency (many steps + tool calls)

- High cost (retries + longer context + more tokens)

- High risk (wrong actions that look “productive”)

PM lens:

If you deploy agents, add boundaries:

- step limits (prevent loops)

- approvals for irreversible actions

- audit logs

- fallbacks to humans or safer workflows
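Those four boundaries can be sketched as a small control loop. The planner, tool names, and `approve` hook below are hypothetical stand-ins (no real agent framework or LLM is involved); the point is where the step limit, approval gate, audit log, and human fallback sit.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

# Hypothetical action names that cannot be undone.
IRREVERSIBLE = {"issue_refund", "cancel_order"}


@dataclass
class GuardedAgent:
    tools: Dict[str, Callable[[], str]]                # action name -> tool call
    choose_next: Callable[[List[str]], Optional[str]]  # stand-in for the LLM planner
    approve: Callable[[str], bool]                     # human approval hook
    max_steps: int = 5
    audit_log: List[str] = field(default_factory=list)

    def run(self) -> str:
        done: List[str] = []
        for _ in range(self.max_steps):                # step limit prevents loops
            action = self.choose_next(done)
            if action is None:
                return "done"
            if action not in self.tools:
                self.audit_log.append(f"unknown action: {action}")
                return "handed to human"
            if action in IRREVERSIBLE and not self.approve(action):
                self.audit_log.append(f"blocked: {action} needs approval")
                return "handed to human"
            try:
                result = self.tools[action]()
            except Exception as err:                   # any tool failure -> fallback
                self.audit_log.append(f"failed: {action} ({err})")
                return "handed to human"
            self.audit_log.append(f"ok: {action} -> {result}")
            done.append(action)
        self.audit_log.append(f"stopped: hit {self.max_steps}-step limit")
        return "handed to human"


# Hypothetical planner for the delayed-order example: a fixed script.
SCRIPT = ["check_carrier", "open_ticket", "update_eta"]


def scripted_planner(done: List[str]) -> Optional[str]:
    return SCRIPT[len(done)] if len(done) < len(SCRIPT) else None


tools = {
    "check_carrier": lambda: "delayed 2 days",
    "open_ticket": lambda: "ticket opened",
    "update_eta": lambda: "ETA updated",
    "issue_refund": lambda: "refund sent",
}
agent = GuardedAgent(tools, scripted_planner, approve=lambda action: False)
print(agent.run())        # done
print(agent.audit_log)
```

In a real system `choose_next` would be a model call and `approve` a real human step; the guardrails live in ordinary code around the model, not inside it.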

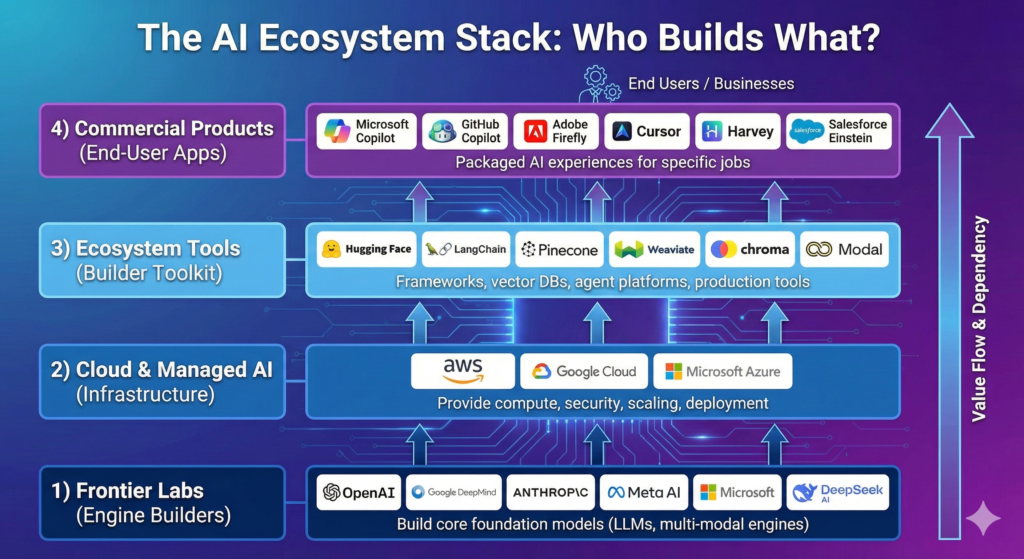

The AI Ecosystem (Who Builds What?)

Here’s the simplest model:

Frontier Labs (build the “engines”)

Examples: OpenAI, Anthropic, Google Gemini, Meta, Microsoft, Alibaba Cloud, DeepSeek AI

They build foundation models—the engines.

Cloud Providers & Managed AI (run and scale the engines)

Examples: AWS, Google Cloud (GCP), Microsoft Azure

They provide infrastructure: security, scaling, monitoring.

Ecosystem Tools (help you ship real products)

Examples:

- Frameworks: Hugging Face, LangChain

- Agent platforms: LangGraph

- Vector databases: Chroma, Pinecone, Weaviate

- Production tools: Modal, Vellum

They help you go from demo → production.

Commercial Products (what end-users buy)

Examples: Microsoft Copilot, GitHub Copilot, Adobe Firefly, Cursor, Harvey, Salesforce Einstein (Health)

These are packaged AI experiences for specific jobs.

PM shortcut:

If a commercial product solves 80% of your need—buy. Build only when your differentiation is real (data, workflow, or domain).

A Simple Decision Guide (Predict vs Generate vs Act)

Use this in stakeholder meetings:

- If the job is predicting (risk, churn, fraud) → start with ML

- If the job is creating drafts/content → GenAI

- If the job is language understanding across documents → LLM or SLM

- If the job is multi-step execution → Agents (with guardrails)

- If the job is shipping reliably → production + monitoring matters more than model hype

FAQs

What’s an SLM and when should I use it?

A Small Language Model is often ideal for “simple lanes” like classification or extraction—cheaper, faster, and easier to run at scale (as long as accuracy is benchmarked).

Why do LLMs hallucinate?

Because they’re probabilistic generators, not deterministic calculators. They predict plausible outputs, which can include plausible nonsense.

Is GenAI the same as an LLM?

No. LLMs are GenAI for language. GenAI also includes image/audio/video models.

Are agents just fancy chatbots?

Not really. Agents plan + use tools + execute steps. That action layer is where value and risk live.

What should leaders measure first?

Not “coolness.” Measure, in this order:

- accuracy on real tasks

- cost per successful outcome

- latency

- risk (wrong actions)

- fallback success rate
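Most of those measures fall out of a simple run log. A sketch, assuming each logged run records whether the task succeeded, its cost, its latency, whether it took a wrong action, and whether it fell back to a human (and whether that fallback resolved the issue). The fields and sample numbers are hypothetical.

```python
from dataclasses import dataclass
from statistics import median
from typing import List, Optional


@dataclass
class Run:
    success: bool        # did the task end correctly?
    cost_usd: float
    latency_s: float
    wrong_action: bool   # did it take an action it should not have?
    fell_back: bool      # was it handed to a human or a safer workflow?
    fallback_ok: bool    # if it fell back, was the issue resolved?


def scorecard(runs: List[Run]) -> dict:
    successes = sum(r.success for r in runs)
    fallbacks = [r for r in runs if r.fell_back]
    fallback_rate: Optional[float] = (
        sum(r.fallback_ok for r in fallbacks) / len(fallbacks) if fallbacks else None
    )
    return {
        "accuracy": successes / len(runs),
        # Total spend over successes: failed runs still cost money.
        "cost_per_success": (sum(r.cost_usd for r in runs) / successes
                             if successes else float("inf")),
        "median_latency_s": median([r.latency_s for r in runs]),
        "wrong_action_rate": sum(r.wrong_action for r in runs) / len(runs),
        "fallback_success_rate": fallback_rate,
    }


# Hypothetical log of four runs.
RUNS = [
    Run(True, 0.02, 1.0, False, False, False),
    Run(True, 0.02, 2.0, False, False, False),
    Run(False, 0.04, 6.0, True, True, True),
    Run(True, 0.02, 1.5, False, False, False),
]
print(scorecard(RUNS))
```

Dividing total spend by successes, rather than by all runs, is what makes “cost per successful outcome” honest: a cheap model that fails often can end up costing more per result.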

Read Next:

- I explored how to work smarter with ChatGPT in everyday AI workflows.

- I explained the EU AI Act Phase 2 policies for 2026 that every leader should know.

Closing

You don’t need to memorize buzzwords to lead in AI. You need shared vocabulary so your team can answer one question clearly:

Are we predicting, generating, or acting—and what will it cost when this scales?

And in 2026, there’s a bonus question worth adding:

Can an SLM handle the simple lane before we pay LLM prices?