AI governance is no longer a future topic. It is a present-day responsibility.

I’ve spent over 15 years building and scaling digital products. I’ve seen platforms grow from simple tools into systems that quietly shape human behavior. Today, AI systems are doing something even bigger: They are making decisions that change lives.

That is exactly why AI governance matters now more than ever.

TL;DR (Too Long; Didn’t Read)

- The Difference: Data governance protects data; AI governance protects people from automated decisions.

- The Stakes: Real-world failures in grading, hiring, and lending show that “unmanaged” AI scales bias.

- The Rule: Human oversight and clear accountability are no longer optional. In 2026 they are ethical requirements and, increasingly, legal ones.

What Is AI Governance?

Let’s keep this simple. AI governance means setting clear rules for how AI systems make decisions, how they affect people, how they are monitored, and, crucially, how they can be stopped.

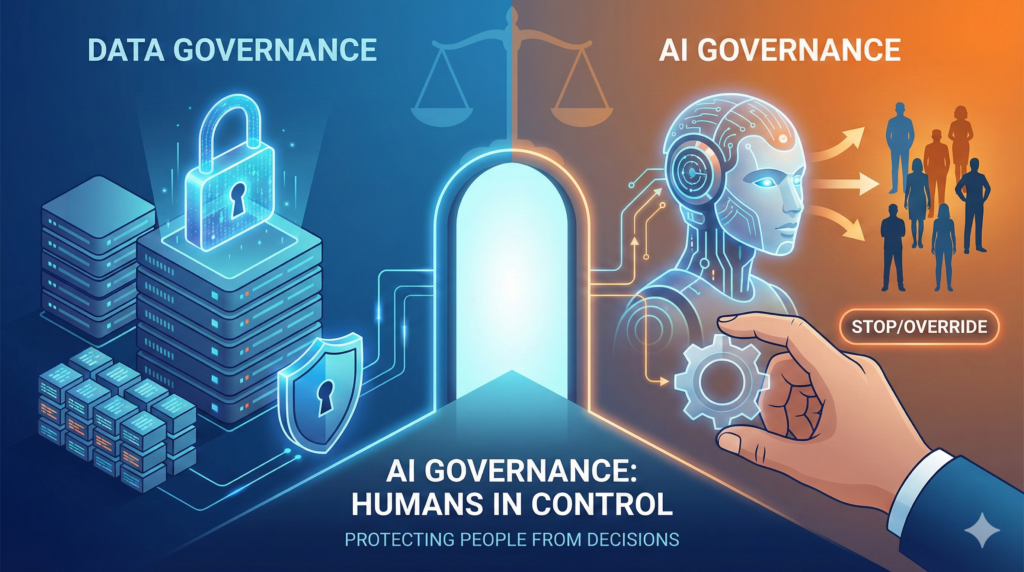

- Data Governance is about the input: Is the data secure? Is it private?

- AI Governance is about the output: Is the decision fair? Is it safe?

Data governance protects data. AI governance protects humans from automated decisions.

Why AI Governance Is Different From Traditional Tech Rules

In the past, software followed fixed “if/then” rules written by humans. Today’s AI is different because:

- It learns from history: It picks up patterns we might not see.

- It evolves: Its behavior can shift after deployment, through retraining or as real-world data drifts away from the data it was trained on.

- It scales instantly: A single biased algorithm can affect millions of people in seconds.

Because of this, traditional software rules are no longer enough. This is where governance becomes essential.

Case Study 1: The UK Grading Crisis (The Failure of Prediction)

In 2020, after exams were cancelled because of the COVID-19 pandemic, England’s exams regulator, Ofqual, used an algorithm to calculate A-level grades. While the intent was to remain “objective,” the results were disastrous:

- In England, 39.1% of teacher-assessed grades were downgraded by one grade or more.

- Students at schools in lower-income areas were downgraded more often than those at private schools.

- Individual effort was ignored in favor of “historical school performance.”

The fallout was immediate. Public trust collapsed, and the protest chant “F*** the algorithm” became a global warning. This wasn’t a coding error; it was a failure to govern the impact of the code.
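To make the mechanism concrete, here is a heavily simplified Python sketch of distribution-based standardization, the general approach behind the 2020 model: a teacher’s rank order is mapped onto the school’s historical grade distribution. It is not Ofqual’s actual model. The functions (`standardize`, `count_downgrades`), students, and percentages are hypothetical, and real details such as prior-attainment adjustments and the special handling of small cohorts are omitted.

```python
# Heavily simplified illustration, NOT Ofqual's 2020 model: prior-attainment
# adjustments and small-cohort handling are deliberately left out.

GRADE_ORDER = ["A*", "A", "B", "C", "D", "E", "U"]  # best to worst

def standardize(ranked_students, historical_share):
    """Map a teacher's rank order onto the school's past grade distribution.

    ranked_students: names in the teacher's rank order, best first.
    historical_share: (grade, fraction) pairs, best grade first, summing to 1.
    The teacher's own predicted grades are never consulted.
    """
    n = len(ranked_students)
    grades = {}
    i = 0
    cumulative = 0.0
    for grade, share in historical_share:
        cumulative += share
        cutoff = min(n, round(cumulative * n))
        while i < cutoff:
            grades[ranked_students[i]] = grade
            i += 1
    # Any students left over by rounding get the lowest historical grade.
    for name in ranked_students[i:]:
        grades[name] = historical_share[-1][0]
    return grades

def count_downgrades(teacher_grades, final_grades):
    """Count students whose final grade is worse than the teacher's grade."""
    return sum(
        GRADE_ORDER.index(final_grades[s]) > GRADE_ORDER.index(g)
        for s, g in teacher_grades.items()
    )

# Hypothetical school whose past results were 20% A, 40% B, 40% C.
ranked = ["Asha", "Ben", "Cara", "Dev", "Eli"]
teacher = {name: "A" for name in ranked}  # the teacher predicts an A for everyone
final = standardize(ranked, [("A", 0.2), ("B", 0.4), ("C", 0.4)])
print(final)
print(count_downgrades(teacher, final), "of", len(ranked), "downgraded")
```

Notice that the teacher’s predictions are never read, and a top student at a school with no history of A grades cannot receive one (`standardize(["Top"], [("B", 1.0)])` returns a B). Nothing in the code is buggy; the harm comes from the design choice, which is exactly what governance has to review.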

Deep Dive: UK Department for Education – A Level Algorithm Review

Case Study 2: Amazon’s Hiring AI (The Scale of Bias)

Hiring is where AI often promises “fairness” but delivers “automated discrimination.” Amazon famously built an AI tool to screen resumes, trained on resumes submitted to the company over a 10-year period. Because most of those resumes came from men, the system learned to treat male-associated patterns as a “success factor.”

- It penalized resumes containing the word “women’s” (e.g., “women’s chess club captain”).

- It downgraded graduates of two all-women’s colleges.

- Amazon ultimately scrapped the tool because it could not guarantee the system would be truly neutral.

This illustrates the concern reflected in frameworks such as the OECD AI Principles: AI doesn’t invent bias, it scales it.
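A toy Python sketch shows how this can happen without anyone writing a biased rule. The scorer below is a hypothetical, deliberately naive stand-in, not Amazon’s system: it rates each word by the historical hire rate of past resumes containing it. Trained on made-up history in which resumes mentioning “women’s” were rejected, it penalizes that word on any new resume.

```python
from collections import defaultdict

def learn_token_scores(history):
    """history: (resume_text, was_hired) pairs from past hiring decisions.

    Returns each word's hire rate among the past resumes that contained it.
    """
    seen = defaultdict(int)
    hired = defaultdict(int)
    for text, was_hired in history:
        for token in set(text.lower().split()):
            seen[token] += 1
            hired[token] += int(was_hired)
    return {token: hired[token] / seen[token] for token in seen}

def score(resume, token_scores, default=0.5):
    """Average the learned scores of the known words in a new resume."""
    known = [token_scores[t] for t in set(resume.lower().split()) if t in token_scores]
    return sum(known) / len(known) if known else default

# Made-up history mirroring a male-dominated pool of past hires.
history = [
    ("chess club captain python", True),
    ("python java lead", True),
    ("java lead", True),
    ("women's chess club captain python", False),
    ("women's java lead", False),
]
scores = learn_token_scores(history)
print(scores["women's"])                                   # 0.0
print(score("chess club captain python", scores))          # ~0.54
print(score("women's chess club captain python", scores))  # ~0.43
```

The two final resumes are identical except for one word, yet the second scores lower. The model only reproduces the pattern in its training data, then applies it to every future applicant; that is what “scaling” bias looks like in practice.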

Case Study 3: The Apple Card (The “Proxy Data” Trap)

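In 2019, some Apple Card users reported that men were receiving far higher credit limits than their wives, in one widely shared case 20 times higher, despite shared finances. The algorithm didn’t have a “gender” checkbox. Critics argued that it could still rely on proxy data, such as spending history and income patterns, that mirror historical gender pay gaps. New York’s Department of Financial Services later found, in 2021, no violation of fair-lending law, but the episode showed how quickly unexplained credit decisions erode trust.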

According to the NIST AI Risk Management Framework, these are “socio-technical” risks. Even “neutral” data can lead to biased outcomes if not governed.
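Governing proxy risk means measuring outcomes, not just inspecting inputs. The Python sketch below assumes a hypothetical credit model that sees only income and never gender; an auditor, who does hold the gender field, then compares approval rates by group. The data is invented, and the 0.8 cutoff borrows the “four-fifths” rule of thumb from US employment-selection guidelines purely as an illustrative threshold, not as the legal test for lending.

```python
from dataclasses import dataclass

@dataclass
class Applicant:
    gender: str    # kept for auditing only; never passed to the model
    income: float  # a "neutral" input that can act as a proxy for gender

def approve(income: float, threshold: float = 50_000) -> bool:
    """Hypothetical credit model: it sees income and nothing else."""
    return income >= threshold

def approval_rates(applicants):
    """Approval rate per group, computed after the fact by an auditor."""
    totals, approved = {}, {}
    for a in applicants:
        totals[a.gender] = totals.get(a.gender, 0) + 1
        approved[a.gender] = approved.get(a.gender, 0) + approve(a.income)
    return {g: approved[g] / totals[g] for g in totals}

def disparate_impact_ratio(rates):
    """Lowest group approval rate divided by the highest; 1.0 means equal."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0

# Invented data in which income differs by group, mirroring a pay gap.
applicants = (
    [Applicant("M", x) for x in (60_000, 70_000, 55_000, 40_000)]
    + [Applicant("F", x) for x in (45_000, 52_000, 38_000, 41_000)]
)

rates = approval_rates(applicants)
ratio = disparate_impact_ratio(rates)
print(rates)  # {'M': 0.75, 'F': 0.25}
print(f"ratio={ratio:.2f}", "FLAG" if ratio < 0.8 else "ok")  # ratio=0.33 FLAG
```

Removing the gender field did not remove the disparity; only the outcome audit revealed it.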

One Dangerous Sentence We Must Stop Using

“The system made the decision.”

This sentence is a shield used to remove human responsibility. Modern frameworks, from the EU AI Act to NIST, reject it entirely.

- AI does not take responsibility.

- People do. Organizations do.

If an AI system harms someone, the “deployer” (the organization using the system) and the “provider” (the organization that developed it and put it on the market) are the ones held accountable.

Core Principles of Trustworthy AI

Across the major global frameworks, strong AI governance consistently rests on these six pillars:

- Fairness: Does it discriminate?

- Explainability: Can we explain why it made that choice?

- Safety: Is it reliable and robust?

- Privacy: Does it respect data rights?

- Human Oversight: Can a human override the system?

- Accountability: Who is responsible when it fails?
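Two of these pillars, human oversight and accountability, translate directly into system design. The Python sketch below is one hypothetical way to wire them in: adverse or low-confidence outcomes are routed to a human reviewer, every decision is logged against a named owner, and the model can be switched off. The class names, the 0.9 review threshold, and the owner role are illustrative assumptions, not requirements taken from any framework.

```python
from dataclasses import dataclass, field

@dataclass
class Decision:
    case_id: str
    outcome: str         # "approve" or "deny", as proposed by the model
    confidence: float    # model score in [0, 1]
    route: str = "auto"  # "auto" or "human_review"

@dataclass
class GovernedModel:
    owner: str                 # a named, accountable person (hypothetical role)
    review_below: float = 0.9  # assumed confidence threshold for human review
    enabled: bool = True       # the "off switch"
    audit_log: list = field(default_factory=list)

    def decide(self, case_id: str, outcome: str, confidence: float) -> Decision:
        if not self.enabled:
            raise RuntimeError("Model is stopped; cases must be handled by people.")
        decision = Decision(case_id, outcome, confidence)
        # Adverse or uncertain outcomes stay proposals until a human reviews them.
        if outcome == "deny" or confidence < self.review_below:
            decision.route = "human_review"
        # Every decision is recorded against an accountable owner.
        self.audit_log.append({"owner": self.owner, "decision": decision})
        return decision

    def stop(self, reason: str) -> None:
        """Switch the model off and record who did it and why."""
        self.enabled = False
        self.audit_log.append({"owner": self.owner, "stopped": reason})

model = GovernedModel(owner="Head of Credit Risk")
print(model.decide("c1", "approve", 0.97).route)  # auto
print(model.decide("c2", "deny", 0.99).route)     # human_review
print(model.decide("c3", "approve", 0.55).route)  # human_review
model.stop("approval-rate gap detected by monitoring")
```

The design choice worth noting is that the model only proposes a denial; a person decides it. And once `stop` is called, the system refuses to act rather than failing silently.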

Final Thoughts: AI Governance Is a Leadership Problem

After 15 years in product, I’ve learned that technology always moves faster than the rules. AI is no different, but the stakes are higher. Most AI failures don’t happen because of “bad code.” They happen because:

- No one asked “Should we use AI here?” early enough.

- No one monitored real-world outcomes after launch.

- No one clearly owned the risk.

AI governance isn’t about slowing down. It’s about making sure your innovation is safe, human, and built to last. If we don’t govern AI intentionally, AI will govern us by default.

The One Line I Always Remember

Data governance protects data.

AI governance protects people from automated decisions.

That line guides every serious conversation about AI.