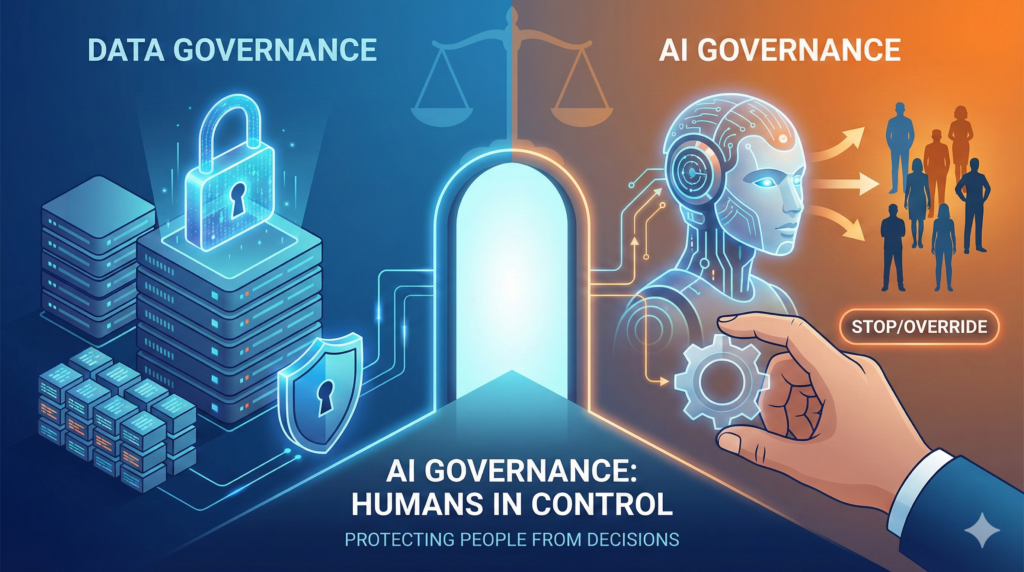

AI Governance in 2026: Why Algorithms Now Need Human Rules

AI governance is no longer a future topic. It is a present-day responsibility. I’ve spent over 15 years building and scaling digital products. I’ve seen platforms grow from simple tools into systems that quietly shape human behavior. Today, AI systems are doing something even bigger: They are making decisions that change lives. That is exactly […]
