EU AI Act Phase Two: What Startups Must Change Before 2026

If you’re building or using AI in Europe, EU AI Act Phase Two is where regulation becomes unavoidable. What once sounded like distant policy is now turning into enforceable rules—with deadlines, audits, and serious penalties attached.

From AI-powered hiring tools to large language models, EU AI Act Phase Two directly impacts how AI systems are trained, deployed, and monitored. For startups especially, waiting too long can mean rushed compliance, delayed launches, or fines that can reach 7% of global annual turnover for the most serious violations.

This guide breaks down EU AI Act Phase Two into five simplified, practical rules so startups know exactly what to change before enforcement begins.

Executive Summary

EU AI Act Phase Two marks the shift from policy promises to operational enforcement.

While Phase One (early 2025) focused on banning “unacceptable-risk” uses—such as social scoring and emotion recognition in schools—EU AI Act Phase Two focuses on how AI systems actually work in the real world.

This phase introduces enforceable obligations for:

- General-Purpose AI (GPAI) models

- Transparency and watermarking for generative AI

- High-risk AI systems used in sensitive areas like hiring, finance, and healthcare

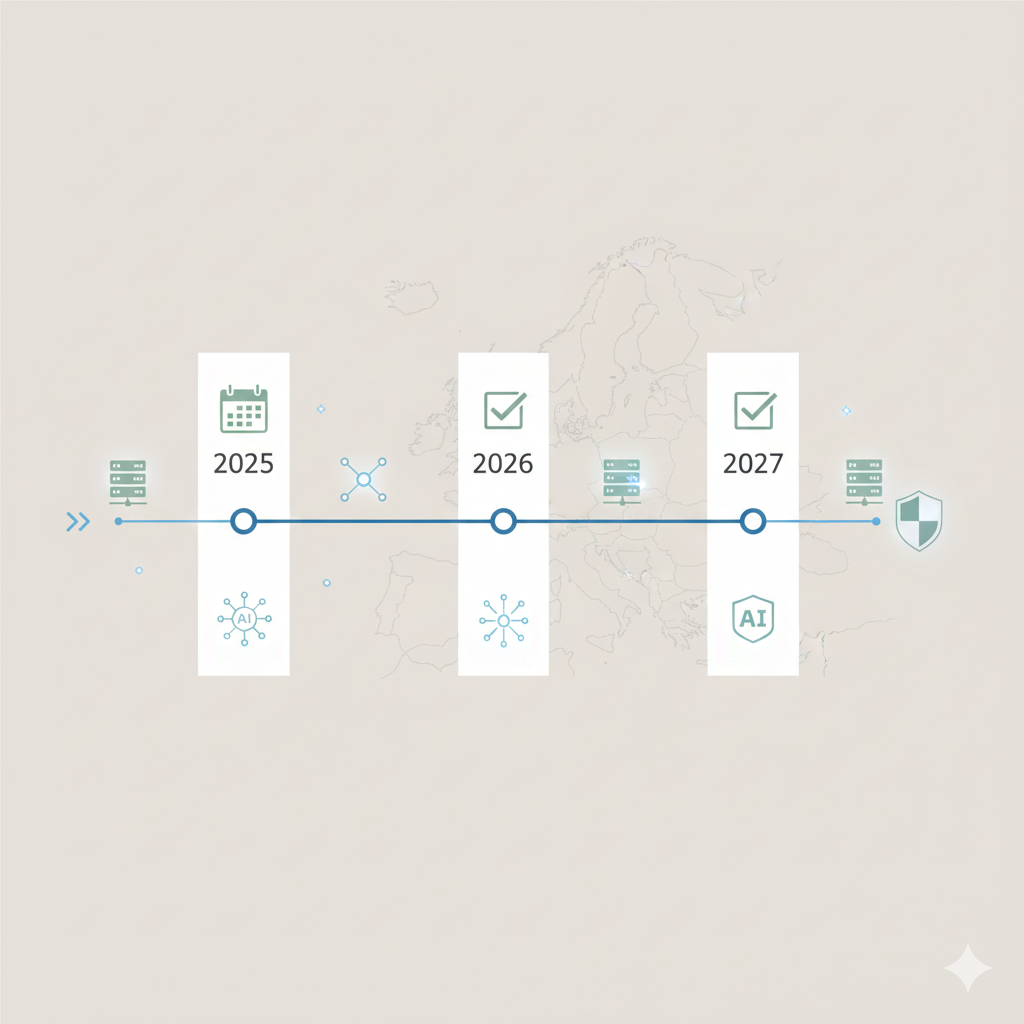

Key dates startups must know:

- August 2025: GPAI transparency rules take effect

- December 17, 2025: First draft of the EU Code of Practice on AI transparency is published

- August 2026: Compliance deadline for most high-risk AI systems

- August 2027: Extended deadline for AI embedded in regulated products (e.g. medical devices)

The most serious violations can result in fines of up to 7% of global annual turnover (European Commission); lower caps apply to other breaches.

TL;DR — EU AI Act Phase Two in Simple Terms

- EU AI Act Phase Two is about enforcement

- GPAI and generative AI face new transparency rules

- High-risk AI systems must comply starting August 2026

- Some regulated products have until August 2027

- Human oversight and data quality are now legal requirements

- Early preparation gives startups a real advantage

What Is EU AI Act Phase Two (And Why Startups Should Care)

The era of “voluntary AI safety” is over.

As of late 2025, EU AI Act Phase Two defines the minimum standards for legally operating AI in the European Single Market. Unlike Phase One, which removed clearly harmful use cases, this phase regulates how AI systems are designed, documented, and supervised over time.

EU AI Act Phase Two focuses on:

- Model training and documentation

- Disclosure of AI-generated content

- Ongoing risk monitoring and governance

Whether you’re an AI Provider building models or a Deployer integrating AI into a product, compliance is now a baseline requirement, not a competitive extra.

👉 Internal reading:

AI Regulation & Compliance Trends Every Startup Should Know

https://www.theautomationstrategist.com/ai-compliance-risk-governance

The 5 Simplified Rules of EU AI Act Phase Two

1. Correctly Identify Your AI Risk Tier

Under EU AI Act Phase Two, classification is everything.

AI systems are grouped by risk, and each category determines your obligations.

- GPAI providers:

Developers of general-purpose models (including LLMs) must provide technical documentation to downstream users.

- Systemic-risk models:

If your model was trained using more than 10^25 FLOPs, it faces enhanced oversight, including red-teaming, incident reporting, and risk mitigation.

In practice:

Many startups fail compliance simply by misclassifying their AI.
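For a rough first check against the 10^25 FLOPs threshold, a back-of-the-envelope estimate can help. The sketch below assumes the common rule of thumb that training a dense transformer costs about 6 × parameters × training tokens. That approximation, the function names, and the example model sizes are illustrative assumptions, not a method prescribed by the Act.

```python
# Rule-of-thumb estimate (an assumption, not an official method):
# training FLOPs ~= 6 * parameters * training tokens.

SYSTEMIC_RISK_THRESHOLD_FLOPS = 1e25  # 10^25 FLOPs, the threshold cited above


def estimate_training_flops(n_parameters: float, n_tokens: float) -> float:
    """Approximate total training compute for a dense transformer."""
    return 6.0 * n_parameters * n_tokens


def exceeds_systemic_risk_threshold(n_parameters: float, n_tokens: float) -> bool:
    """True if the estimated compute is above the 10^25 FLOPs threshold."""
    return estimate_training_flops(n_parameters, n_tokens) > SYSTEMIC_RISK_THRESHOLD_FLOPS


if __name__ == "__main__":
    # Hypothetical model: 7 billion parameters, 2 trillion training tokens.
    flops = estimate_training_flops(7e9, 2e12)
    print(f"Estimated training compute: {flops:.2e} FLOPs")
    print("Above threshold:", exceeds_systemic_risk_threshold(7e9, 2e12))
```

An estimate like this only tells you which side of the line you are probably on. A model close to the threshold needs a proper compute accounting and legal review.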

📎 External reference:

EU Commission – General-Purpose AI Models

2. Automate Transparency and Disclosure

Transparency is central to EU AI Act Phase Two.

The December 17, 2025 draft Code of Practice recommends a multilayered transparency approach, combining:

- Technical metadata or watermarking, and

- Visible indicators or icons that clearly signal AI-generated content

Users must also be informed when interacting with AI systems such as chatbots or virtual assistants.

In practice: If a user can’t reasonably tell they’re engaging with AI, you’re already at risk.
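The two layers described above (machine-readable metadata plus a visible indicator) can be sketched as a small wrapper around whatever produces your output. This is a minimal illustration only: the label text, field names, and `label_output` function are hypothetical, and a metadata record like this is not a cryptographic watermark.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone

VISIBLE_LABEL = "[AI-generated]"  # hypothetical label text shown to the user


@dataclass
class DisclosureRecord:
    """Machine-readable metadata stored or shipped alongside the content."""
    ai_generated: bool
    generator: str
    created_at: str


def label_output(text: str, generator: str) -> dict:
    """Attach both a visible label and a metadata record to generated text."""
    record = DisclosureRecord(
        ai_generated=True,
        generator=generator,
        created_at=datetime.now(timezone.utc).isoformat(),
    )
    return {
        "display_text": f"{VISIBLE_LABEL} {text}",
        "metadata": asdict(record),
    }


if __name__ == "__main__":
    result = label_output("Here is your summary.", generator="demo-model")
    print(json.dumps(result, indent=2))
```

Applying the label at the point of generation, rather than in each front end, makes it harder for a new channel to ship without disclosure.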

3. Prepare Early for High-Risk AI Compliance

High-risk AI systems include those used in:

- Hiring and recruitment

- Credit scoring and lending

- Education and exams

- Healthcare and critical infrastructure

Compliance deadlines under EU AI Act Phase Two:

- Annex III systems (HR, education, credit): August 2026

- AI embedded in regulated products (medical devices, machinery): August 2027
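To keep these deadlines visible in planning, a team might track the remaining runway per system category. The sketch below uses the months stated above. The day of the month (set to the 1st) and the category keys are assumptions made for illustration, so confirm the exact dates against the official text.

```python
from datetime import date

# Deadlines from the list above. The text gives only month and year,
# so the day (1st) is a placeholder assumption.
DEADLINES = {
    "annex_iii": date(2026, 8, 1),          # HR, education, credit
    "regulated_product": date(2027, 8, 1),  # medical devices, machinery
}


def days_until_deadline(category: str, today: date) -> int:
    """Days remaining before the deadline for a given system category."""
    return (DEADLINES[category] - today).days


if __name__ == "__main__":
    today = date.today()
    for category in DEADLINES:
        print(category, days_until_deadline(category, today), "days remaining")
```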

High-risk systems must implement:

- A Quality Management System (QMS)

- A Risk Management System

- Lifecycle monitoring, not just pre-launch checks

In practice:

Compliance is continuous—not a one-time certification.

4. Prove Data Integrity and Governance

Under EU AI Act Phase Two, regulators can examine how your data was sourced, cleaned, and maintained.

Your datasets must be:

- Relevant and representative

- Properly documented

- As bias-free as technically possible

In practice:

Poor training data is no longer just a technical problem—it’s a legal one.
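One simple way to start documenting representativeness is to compare the share of each group in a dataset against a reference distribution. The sketch below is illustrative only: the 5-point tolerance, the `age_band` field, and the reference shares are hypothetical, and a real bias assessment would go well beyond group counts.

```python
from collections import Counter


def group_shares(records: list, key: str) -> dict:
    """Fraction of records that fall into each value of `key`."""
    counts = Counter(r[key] for r in records)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}


def underrepresented_groups(records: list, key: str,
                            reference_shares: dict,
                            tolerance: float = 0.05) -> dict:
    """Groups whose observed share falls short of the reference by more than `tolerance`.

    Returns {group: (observed_share, expected_share)}.
    """
    observed = group_shares(records, key)
    flagged = {}
    for group, expected in reference_shares.items():
        actual = observed.get(group, 0.0)
        if expected - actual > tolerance:
            flagged[group] = (actual, expected)
    return flagged


if __name__ == "__main__":
    # Hypothetical dataset: 8 records for one age band, 2 for another.
    data = [{"age_band": "18-34"}] * 8 + [{"age_band": "55+"}] * 2
    reference = {"18-34": 0.5, "55+": 0.5}
    print(underrepresented_groups(data, "age_band", reference))
```

Storing the output of a check like this alongside each dataset version gives you a dated record to show when asked how the data was assessed.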

5. Keep Humans in Control

Under EU AI Act Phase Two, high-risk AI systems that run without effective human oversight expose their providers and deployers to significant liability.

High-risk systems must allow:

- Human intervention

- Decision overrides

- Real-time shutdown

In practice:

“Set it and forget it” AI deployments are incompatible with EU law.
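The three capabilities listed above (intervention, override, shutdown) can be sketched as a thin gate around a model call. Everything here is a hypothetical illustration: the `OversightGate` class, the reviewer callback, and the log format are assumptions, not a structure required by the regulation.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class OversightGate:
    """Wraps a model so a human can review, override, or halt its decisions."""
    model: Callable[[dict], str]
    halted: bool = False
    audit_log: list = field(default_factory=list)

    def halt(self) -> None:
        """Operator stop switch: no further decisions are issued."""
        self.halted = True

    def decide(self, case: dict,
               reviewer: Optional[Callable[[dict, str], Optional[str]]] = None) -> str:
        if self.halted:
            raise RuntimeError("System halted by operator")
        proposed = self.model(case)
        # The reviewer returns a replacement decision, or None to accept the proposal.
        override = reviewer(case, proposed) if reviewer else None
        final = override if override is not None else proposed
        self.audit_log.append({
            "case": case,
            "proposed": proposed,
            "final": final,
            "overridden": override is not None,
        })
        return final


if __name__ == "__main__":
    gate = OversightGate(model=lambda case: "reject")
    print(gate.decide({"id": 1}))                                       # model decision stands
    print(gate.decide({"id": 2}, reviewer=lambda c, p: "accept"))       # human override
    gate.halt()                                                         # later calls raise RuntimeError
```

Logging both the proposed and the final decision matters as much as the override itself, because it documents that the oversight was actually exercised.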

EU AI Act Phase Two: Compliance Timeline

| Milestone | Date |
|---|---|
| Unacceptable-risk bans | Feb 2025 |
| GPAI transparency rules | Aug 2025 |
| Code of Practice (first draft) | Dec 2025 |
| Annex III high-risk deadline | Aug 2026 |
| Regulated product deadline | Aug 2027 |

Free Downloadable Resource

Get the EU AI Act Phase Two Audit Template

A 15-point compliance checklist built specifically for Seed to Series B startups.

This practical audit template helps you:

- Track data governance requirements

- Document human-in-the-loop workflows

- Identify high-risk system gaps early

- Prepare for audits by EU and national AI authorities

👉 Download the EU AI Act Phase Two Audit Template

(Free – designed for startup teams, not lawyers)

Final Thoughts: Why EU AI Act Phase Two Is a Strategic Moment

EU AI Act Phase Two is not about slowing startups down. It’s about making AI auditable, accountable, and trustworthy at scale.

One final practical tip: enforcement is becoming local. Since December 2025, EU Member States have designated National AI Authorities. Bodies such as France’s CNIL and Ireland’s Coimisiún na Meán are already issuing country-specific templates and disclosure guidance that go beyond EU-level text.

Checking your local regulator early can save months of compliance rework later.

In the AI economy, compliance is not paperwork — it’s strategy.

And under EU AI Act Phase Two, startups that prepare in 2025 will scale with confidence in 2026 and beyond.

Need Help With AI Compliance?

I work with teams to build AI systems that are efficient, auditable, and regulation-ready. If you’re looking for practical support — not theory — feel free to reach out.
